RIDGEFIELD PARK, N.J. – MAY 20, 2020 – Samsung Electronics America, Inc. today introduced the Samsung Galaxy S20 Tactical Edition (TE), a mission-ready smartphone solution tailored to the unique needs of operators in the federal government and Department of Defense (DoD). With a highly customized software and feature set, the Galaxy S20 TE operates seamlessly with a range of existing peripherals and supports the requirements of tactical and classified applications, especially those designed to help operators navigate complex terrain, expansive distances, and the potential loss of communication with command units. Galaxy S20 TE also introduces DualDAR architecture, which delivers two layers of data encryption based on NSA standards to secure data up to the top-secret level for classified missions.

“The development of this solution is a result of coordination and feedback received from our Department of Defense customers and partners,” stated Taher Behbehani, Head of the Mobile B2B Division, SVP and General Manager, Samsung Electronics America. “The Galaxy S20 Tactical Edition provides the warfighter with the technology that will give them an edge in the field, while providing their IT teams with an easy-to-deploy, highly secure solution that meets the demands of their regulated environment.”

Galaxy S20 TE offers federal program managers and executive officers a mobile solution that is easy to manage and deploy, works with a broad range of technologies, and is backed by the assurance of the defense-grade Samsung Knox mobility platform. It harnesses the most sought-after tools of Samsung’s premium Galaxy devices in a unique, easy-to-use configuration.

•Helps Operators Stay Connected in Multi-Domain Operations. Galaxy S20 TE easily connects to tactical radios and mission systems out of the box, ensuring seamless operations. Multi-Ethernet capabilities provide dedicated connections to mission systems, while network support for Private SIM, 5G, Wi-Fi 6 and CBRS ensures a connection is maintained throughout multi-domain environments.

•Provides Complete, Accurate Real-Time Situational Awareness. Galaxy S20 TE caters to the unique needs of military operators through customization of numerous device features. A night-vision mode allows the operator to turn the display on or off when wearing night-vision eyewear, while stealth mode allows them to disable LTE and mute all RF broadcasting for complete off-grid communications. Operators can easily unlock the device screen in landscape mode while it’s mounted to their chest, and quick-launch their most commonly used apps at the push of a button.

•One Device for All Mission Requirements. When in the field, operators need a lightweight, easy-to-carry device that doesn’t weigh them down, yet offers the power they need to complete the mission. With its powerful 64-bit Octa-Core processor, Galaxy S20 TE can run multiple mission applications in the field (ATAK, APASS, KILSWITCH, BATDOK) so operators can access the intelligence they need. Galaxy S20 TE also includes powerful Samsung DeX software, which offers a PC-like experience when connected to a monitor, keyboard, and mouse. With DeX, operators can use the device for completing reports, training or mission planning when in a vehicle or back at the base.

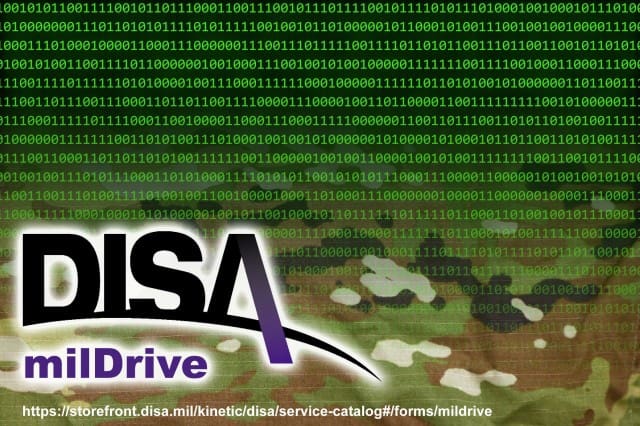

•Certified and Secure for Special Operations. Galaxy S20 TE is built on Samsung Knox, the defense-grade mobile security platform that protects the device from hardware through software layers. DualDAR architecture further secures the device with two layers of encryption, even when the device is in a powered-off or unauthenticated state. This multi-layer, embedded defense system helps Galaxy S20 TE meet the most stringent regulated industry requirements, including those of the NSA’s Commercial Solutions for Classified (CSfC) Components List and the Mobile Device Fundamentals Protection Profile (MDFPP) as laid out by the National Information Assurance Partnership (NIAP). Galaxy S20 TE comes out of the box approved for use within the Department of Defense (DoD) using the Android 10 Security Technical Implementation Guide (STIG) as laid out by the Defense Information Systems Agency (DISA).

The Samsung Galaxy S20 TE will be available in Q3 2020 through select IT channel partners. For more information on Galaxy S20 TE, please visit www.samsung.com/TacticalEdition. For more information about Samsung Government, please visit www.samsung.com/us/business/by-industry/government.