Yesterday, several employees at the Pentagon got a pop-up on their computers inviting them to use a new artificial intelligence tool developed for the War Department. Some were skeptical, wondering if the invitation was part of a cybersecurity test.

But by this morning, those concerns were gone: posters around the Pentagon and an email from Secretary of War Pete Hegseth assured everyone not only that the new tool is legit, but also that he wants everybody to start using it.

“I am pleased to introduce GenAI.mil, a secure generative AI platform for every member of the Department of War,” Hegseth wrote in the email. “It is live today and available on the desktops of all military personnel, civilians and contractors. With this launch, we are taking a giant step toward mass AI adoption across the department. This tool marks the beginning of a new era, where every member of our workforce can be more efficient and impactful.”

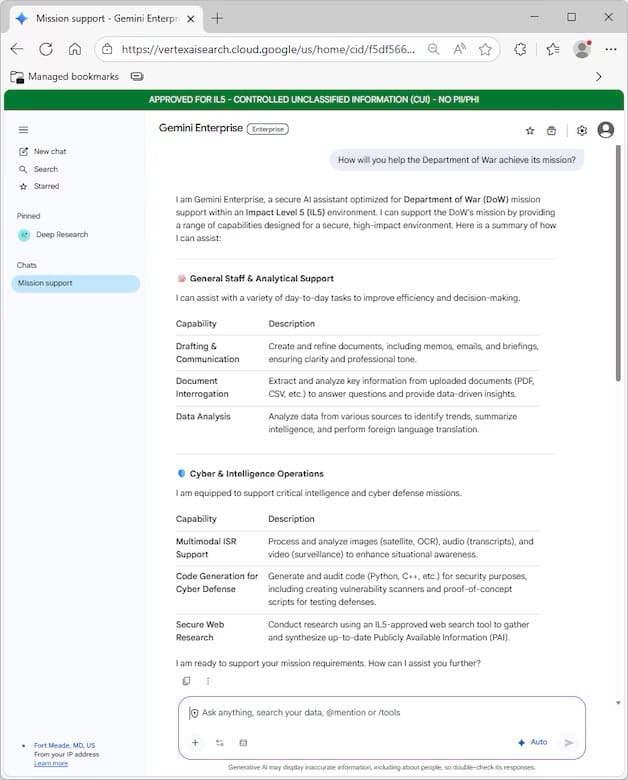

Visitors to the site will find that what’s available now is a specialized version of the Google AI tool Gemini, Gemini for Government. This version is approved to handle controlled unclassified information. A green banner at the top of the page reminds users of what can and can’t be shared on the site.

In addition to Gemini for Government, the site indicates that other American-made frontier AI capabilities will be available soon.

“There is no prize for second place in the global race for AI dominance,” said Emil Michael, undersecretary of war for research and engineering.

“We are moving rapidly to deploy powerful AI capabilities like Gemini for Government directly to our workforce. AI is America’s next manifest destiny, and we’re ensuring that we dominate this new frontier.”

The site is accessible only to personnel who have a common access card and are on the War Department’s nonclassified network.

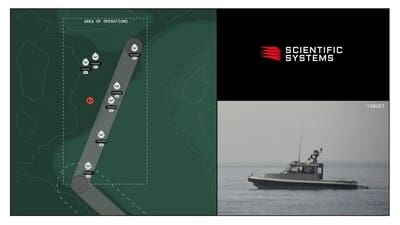

When asked through a user prompt, “How will you help the Department of War achieve its mission?” GenAI replied with a list of capabilities, including creating and refining documents, analyzing information, processing and analyzing satellite images, and even auditing computer code for security purposes.

“I can support the DOW’s mission by providing a range of capabilities designed for a secure, high-impact environment,” GenAI replied. “I am ready to support your mission requirements.”

The tool reminds users to double-check everything it provides to ensure accuracy. The highest authority within the War Department, Hegseth himself, provided that validation.

“The first GenAI platform capability … can help you write documents, ask questions, conduct deep research, format content and unlock new possibilities across your daily workflows,” he wrote. “I expect every member of the department to log in, learn it and incorporate it into your workflows immediately. AI should be in your battle rhythm every single day; it should be your teammate. By mastering this tool, we will outpace our adversaries.”

For those unfamiliar with how to use AI, online training is available at genai.mil/resources/training.

By C. Todd Lopez, Pentagon News